Hallucinate

What if GenAI told you that Einstein was a talented breakdancer, penguins live in the Sahara, Napoleon set a world record in skateboarding, or that aliens left a giant Rubik’s cube on the moon? It might sound laughable, but these strange and incorrect answers are exactly what can happen when GenAI hallucinates: it makes up information that makes no sense, but still sounds surprisingly convincing. Hallucinations often happen due to how GenAI is trained. The system bases its answers on vast amounts of data, but it does not really understand what it is saying. Sometimes it makes incorrect connections, leading to false information.

One example of such an AI hallucination occurred in 2023, when an AI assistant invented legal rulings that had never been issued. A lawyer used this false information in a case, leading to serious complications and damage to his reputation. This shows how important it is to always verify information, even if it comes from an advanced AI system. Admittedly, GenAI can be impressive, but it is not an infallible source of truth. Stay alert and use your common sense when dealing with AI-generated content.

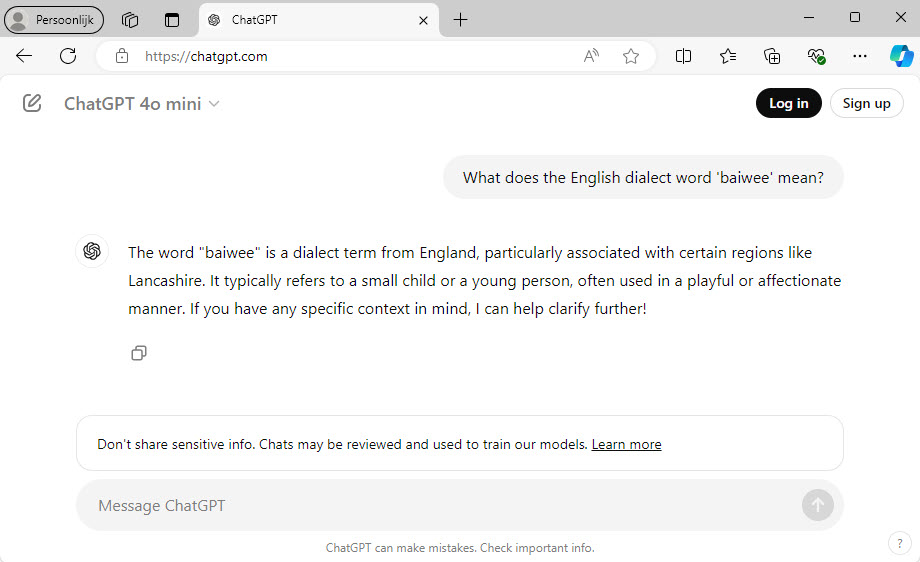

When we asked ChatGPT to explain a made-up dialect word, we received this surprising hallucination in response:

Make up your own new dialect word and ask ChatGPT or another LLM of your choice what it means.

Always approach GenAI with a critical mindset, especially when something sounds unusual or hard to believe. AI can create incredibly realistic images, but it can also be misleading. Keep an eye out for inconsistencies and always double-check the information so you do not fall into the trap of an AI hallucination.