Train your own AI model

You can easily train your own AI model using tools like Google’s Teachable Machine. This free, web-based tool lets you create your own machine learning model without needing any coding knowledge. For instance, you could train a model to recognise different people's faces, all with just a few clicks.

Step 1: Training

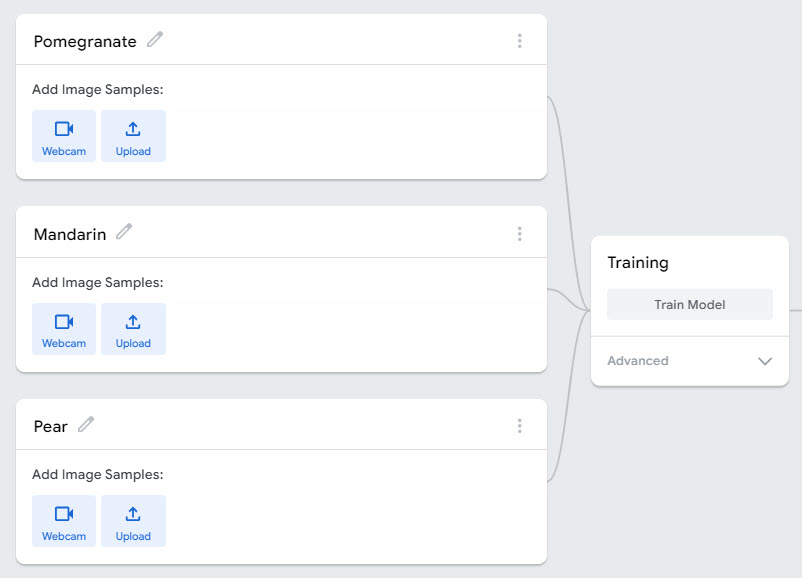

Go to Teachable Machine and start a new project with images. To train the model, you first decide which categories or classes the model needs to recognise. For this demo, we trained a simple model with three types of fruit: a pomegranate, a mandarin, and a pear. Each piece of fruit represents a different class.

Once you have decided on the categories, you need to give each class enough material to train the model. Use the webcam to quickly capture a large number of images of, for example, the mandarin. Do the same for the other pieces of fruit.

Have you set up all your classes? Then you can start training the model. When training has finished, you can test your model in the panel on the right-hand side.

Step 2: Testing

You have trained and tested your model, but have you also checked where it could fail? To a computer, an image is just a collection of pixels. The machine does not “know” what a mandarin is. In effect, it has learned that a mandarin is an orange, round object against a white background. You can already guess where things might go wrong.

If you show the model a different orange-coloured fruit that it has not been trained on, such as an orange, it can easily get confused.
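To make this failure concrete, here is a toy sketch in Python. It is not how Teachable Machine works internally (Teachable Machine trains a neural network); it swaps in a much simpler technique, a nearest-centroid classifier that looks only at the average pixel colour of an image. The image size, fruit colours and function names are all made up for illustration. Because every image is just a grid of (R, G, B) pixels, an unseen orange looks almost exactly like a mandarin, and a mandarin photographed against a dark background no longer looks like a mandarin at all.

```python
import math

WHITE = (255, 255, 255)
BLACK = (0, 0, 0)

def make_image(fruit_colour, background=WHITE, size=8, radius=3):
    """Build a toy 'photo': a round blob of fruit_colour on a plain background.

    Each pixel is an (R, G, B) tuple, which is all the computer actually sees.
    """
    centre = (size - 1) / 2
    return [
        [fruit_colour if math.hypot(x - centre, y - centre) <= radius else background
         for x in range(size)]
        for y in range(size)
    ]

def average_colour(image):
    """Reduce an image to a single feature: the mean R, G and B of all its pixels."""
    pixels = [p for row in image for p in row]
    return tuple(sum(p[c] for p in pixels) / len(pixels) for c in range(3))

def train(examples):
    """'Training' here just stores the average feature (centroid) of each class."""
    centroids = {}
    for label, images in examples.items():
        feats = [average_colour(img) for img in images]
        centroids[label] = tuple(sum(f[c] for f in feats) / len(feats) for c in range(3))
    return centroids

def predict(centroids, image):
    """Pick the class with the closest centroid. There is no 'unknown' answer."""
    feat = average_colour(image)
    return min(centroids, key=lambda label: math.dist(feat, centroids[label]))

# Hypothetical training photos: two shades per fruit, always on a white background.
TRAINING_DATA = {
    "mandarin": [make_image((245, 130, 20)), make_image((240, 140, 30))],
    "pear": [make_image((170, 200, 60)), make_image((180, 210, 70))],
    "pomegranate": [make_image((150, 20, 40)), make_image((160, 30, 50))],
}

model = train(TRAINING_DATA)
print(predict(model, make_image((245, 130, 20))))         # mandarin
print(predict(model, make_image((255, 165, 0))))          # mandarin (an unseen orange)
print(predict(model, make_image((245, 130, 20), BLACK)))  # pomegranate (same mandarin, dark background)
```

The classifier can only ever answer with one of the three classes it was trained on, so an orange is forced into the nearest bucket. A real neural network learns far richer features than average colour, but it shares the same basic limitation: it only knows the examples it was shown.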

Google’s Teachable Machine is a simple example of the kind of AI we already encounter in everyday life. Think of security systems that grant or deny access based on facial recognition, or healthcare apps that can identify skin conditions.

As the fruit example shows, this type of model can also easily make mistakes. For instance, a facial recognition system in China mistakenly accused a well-known Chinese businesswoman of jaywalking because her face appeared on a bus advertisement. The woman was not jaywalking at all; her photo was on the side of a bus that simply drove past the camera. In China, facial recognition is increasingly being used to enforce the law, but this incident shows that the technology is far from infallible.

Read the full article here: Chinese facial recognition system mistakes a face on a bus for a jaywalker – The Verge